What are Adaptive Clinical Trials?

There have been various definitions of adaptive clinical trial designs over the last 10 years but the one below, coming from the FDA draft guidance on the topic, covers it pretty well:

“A clinical trial design that allows for prospectively planned modifications to one or more aspects of the design based on accumulating data from subjects in the trial.” [1]

In simple terms, it means that we anticipate that some features of the study (e.g. sample size, treatment arms, population of interest, etc.) might change during the course of the study (i.e. after randomization), according to a pre-specified set of rules. The key term in the above definition is ‘prospectively planned’: not every planned modification is legitimate or safeguards trial integrity and interpretability, but unplanned changes will never be considered acceptable and will result in the study results being discarded as invalid.

What are the Goals of an Adaptive Trial Design?

The goal of an adaptive trial design is to learn from the accumulated data to increase efficiency and to maximize the chances of success. As implied above, though, adaptation is a design feature aimed at enhancing the trial, not a remedy for inadequate planning.

This greater flexibility within the adaptive design framework typically leads to a better use of available resources (e.g. by dropping an ineffective treatment arm), yet it comes at the cost of a more time-consuming planning stage. So, whilst adaptation can be an appealing property to fit into a trial, it is to be understood that devising an adaptive rule is a multi-stage process that should include all relevant stakeholders (medical experts, statisticians, etc) and that should be well understood by everyone involved in order to achieve the targeted objective.

Why do we need Adaptive Clinical Trials?

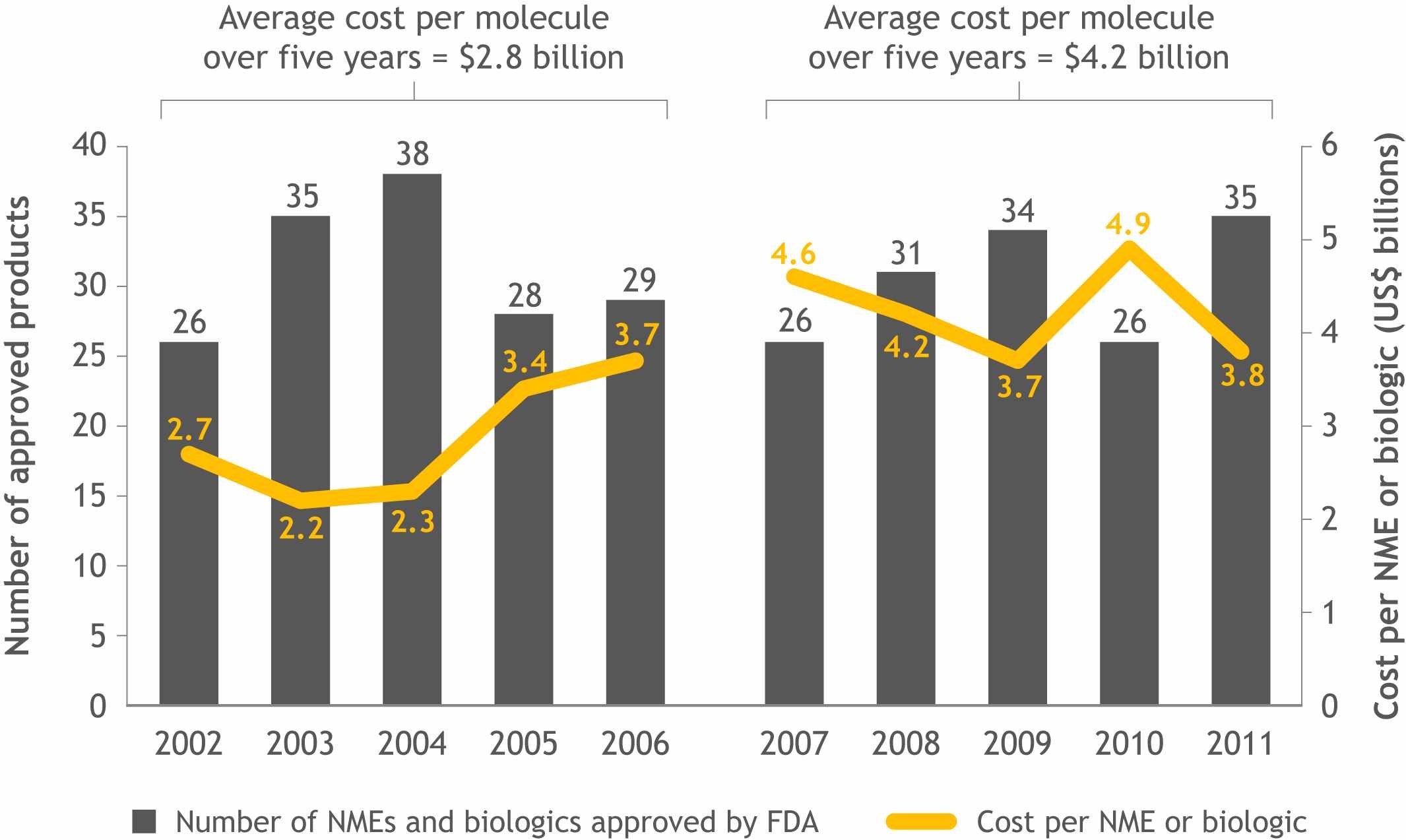

The industry is under increasing pressure to achieve ‘more with less’, yet the current trend points the opposite way: despite rising clinical development costs, the number of products making it to the market has not followed suit, but has rather moved in the opposite direction (see Figure 1).

[Figure 1]

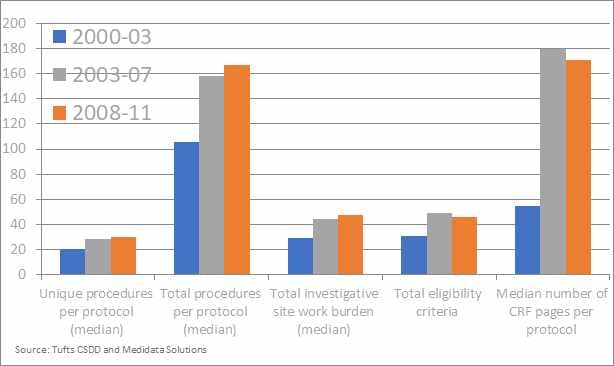

Another key motivation that urged (or at least could/should urge) drug developers to consider adaptive trials is a more practical one, linked to the impact on patients and investigators. Figure 2 reports the median/total number of study procedures, as well as other indicators of trial complexity, for the first decade of this century. Whilst these data might be a bit old, we can reasonably expect that the trend will have at best leveled off, implying that both the investigational sites and the patients face a considerable challenge in dealing with the operational complexity of the trial. As a matter of fact, it is not uncommon for patients to withdraw from a trial because they no longer want to undergo study procedures. In this sense, whilst adaptive trials per se can’t reduce the number of procedures needed, they can reduce the number of patients who need to undergo them or shorten the overall trial duration, thus bringing advantages for both parties involved.

[Figure 2] – Rising cost and complexity

Is this a Good Time for ‘Adapting’ our Trials?

With the rise of new techniques for data capture, the amount of data collected has spiraled, which ultimately means that we’ve got a golden ticket to make better-informed decisions in a more timely manner, based on a larger and potentially more accurately collected amount of data.

In line with the above, over the past 15 years the industry has made great leaps in trying to fill the gap between the traditional monolith-like RCT and the flexibility of adaptive trials. Back in 2004, the FDA’s Critical Path Initiative (CPI) was released to encourage drug developers to drive innovation in the scientific process through which medical products are developed, evaluated and manufactured [2].

In addition, in 2005 the Pharmaceutical Research and Manufacturers of America (PhRMA) formed a Working Group with the aim of bringing together different stakeholders in the pharmaceutical industry to trigger a discussion on the topic. This resulted in a white paper where the key aspects of adaptive trials (i.e. statistical/logistical issues as well as opportunities and recommendations) were discussed, with a view to ensuring that this type of trial could ‘find their way into the mainstreams of designs for pivotal confirmatory trials.’ [3,4]

A Range of Roles for Adaptive Clinical Trials

The definition of adaptive trials taken from the FDA guidance and reported at the beginning of this blog is, indeed, very broad, since it refers to ‘adaptation’ without specifying any real limit to its potential scope.

In fact, there are a number of aspects of a study that can be adapted, which include (but are not limited to):

- Study eligibility criteria (either for subsequent study enrollment or for a subset selection of an analytic population)

- Randomization procedure

- Treatment regimens of the different study groups (e.g., dose level, schedule, duration)

- Total sample size of the study

- Concomitant treatments used

- Planned schedule of patient evaluations for data collection (e.g., number of intermediate timepoints, timing of last patient observation and duration of patient study participation)

- Primary endpoint (e.g., which of several types of outcome assessments, which timepoint of assessment, use of a unitary versus composite endpoint or the components included in a composite endpoint)

- Selection and/or order of secondary endpoints

- Analytic methods to evaluate the endpoints (e.g., covariates of final analysis, statistical methodology, type I error control)

It is also interesting to note that, as hinted at in [4], even though a study is not explicitly labelled as adaptive that doesn’t mean it can’t be viewed as such. Group sequential trials, in fact, embed stopping rules that are pre-defined and imply a change in the study conduct (i.e. early stopping) if met. Similarly, dose escalation studies technically use the accumulation of data to make decisions about the future of the study and could thus be argued to be adaptive-like trials if we go by the definition introduced above.

As will be clear by now, the very reason adaptive trials exist is to allow better decisions to be made in a more effective and efficient manner. That applies to all kinds of decisions: positive (the drug works) and negative (the drug doesn’t work). In fact, one of the first adaptive trials reported in drug development can be considered a success because it allowed early termination due to lack of efficacy, thus preventing an ineffective treatment from being tested for a long time with no actual benefit for patients [5].

4 Core Questions of the Adaptive Clinical Trial Process

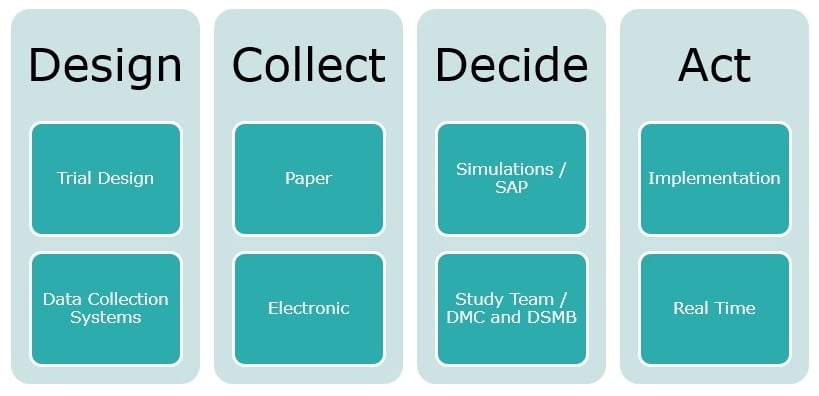

At a high level, clinical development teams investigate the efficacy and safety of an investigational new drug (IND), and in simple terms ask themselves ‘Did the drug hurt? And did it work?’ However, there are more questions than just these two, and in an adaptive approach four of them become even more crucial:

- What is the design?

- What data are we trying to collect, and how?

- What are we trying to prove, and which decisions will get us there?

- How will we act on and implement those decisions?

When going down the adaptive route, in fact:

- the design of the study can dictate to what extent we actually can adapt (e.g. if the anticipated sample size already exceeds the operational limit there will be no use in planning for sample size re-estimation)

- the way the endpoint of interest is collected will put limits on the timing of interim analyses: if the study recruits quickly, then an interim analysis and any subsequent stopping decision might have little impact, because most patients might have already completed the study by the time the interim is ‘operationally’ performed.

- it is clear that not all adaptations are as valid as the question they’re trying to answer: changing the primary endpoint because the interim showed better results for another one is the wrong answer to a perfectly valid question (i.e. is the current endpoint the best one to capture treatment effect?)

- the rules and decisions have to be clearly stated, but must allow discussion in the DMC/DSMB: potential safety signals should not be ignored only because the statistical test is significant at the interim stage
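The point about recruitment speed and interim timing can be made concrete with some back-of-the-envelope arithmetic. The sketch below is only an illustration: the function name, the uniform accrual assumption, the fixed follow-up time and all the numbers are hypothetical and not taken from any real study.

```python
def enrolled_at_interim(total_n, accrual_per_month, followup_months, interim_fraction):
    """Return (month of the interim, patients already enrolled by then).

    Simplifying assumptions: patients are recruited at a constant rate and
    the endpoint is observed exactly `followup_months` after enrollment.
    """
    # Number of patients whose endpoint data are needed for the interim
    needed = interim_fraction * total_n
    # The interim can happen once the last of those patients has been followed up
    interim_time = needed / accrual_per_month + followup_months
    # Recruitment keeps going in the meantime, capped at the planned sample size
    enrolled = min(total_n, accrual_per_month * interim_time)
    return interim_time, enrolled


# Hypothetical trial: 200 patients, 6-month endpoint, interim at 50% of the data
for rate in (5, 20, 50):
    month, enrolled = enrolled_at_interim(
        total_n=200, accrual_per_month=rate, followup_months=6, interim_fraction=0.5
    )
    print(f"{rate:>2} patients/month: interim at month {month:.0f}, "
          f"{enrolled:.0f} of 200 already enrolled")
```

Under these assumptions, recruiting 5 patients per month leaves 70 patients still to be enrolled when the interim takes place, so a stopping decision can still spare them. At 20 patients per month or more, all 200 patients are already in the study by then, and stopping early changes very little.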

In the next section we’re going to present some examples of adaptive designs without delving too deeply into the statistical complexities of each, simply to highlight the potential that adaptation holds for changing the way single trials (and global development programs) are usually thought of.

Examples of an Adaptive Trial Design

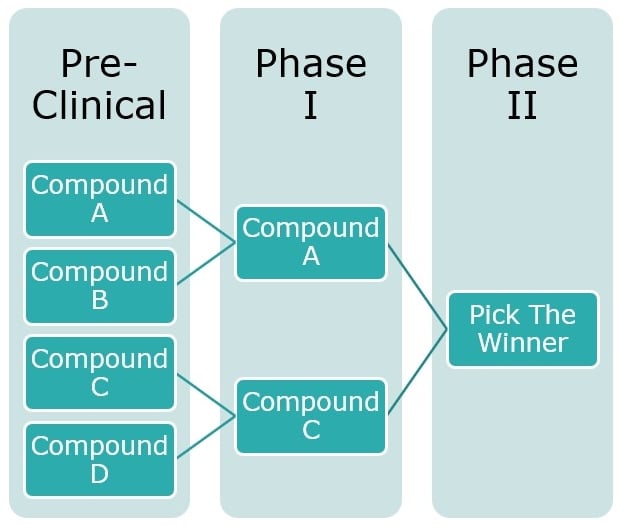

Example 1: Pick the Winner

In the above example there are four compounds in development with very similar therapeutic features. One product is about to go into its first-in-man studies and two other products are close behind, coming out of the lab. Rather than proceeding with multiple independent studies, all of the compounds’ development plans are stopped and a new trial is designed that studies all four compounds together in a ‘pick the winner’ design, similar to the studies presented in Royston et al. [6] and Hills et al. [7]. The choice of which drug is carried forward to the next stage of development is made based on pre-planned criteria, usually relying on some surrogate for the primary endpoint (since it might be too early to assess drug effectiveness on common endpoints, e.g. survival). The main advantage of a trial like this is that, rather than performing full-scale Phase 2 and 3 trials on all compounds, only the most promising ones are brought forward, and fewer patients have to be treated with ineffective treatments, thereby maximizing the resources that can be used for effective ones.

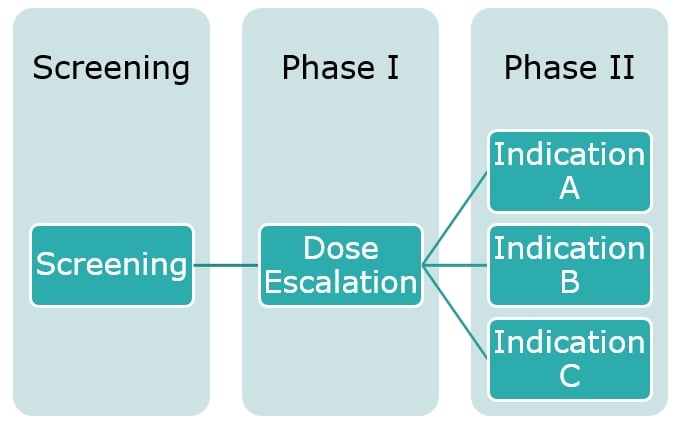

Example 2: An Oncology Clinical Study Design

Within oncology clinical study design it is common to see an approach sometimes referred to as a seamless Phase I/Phase II design. This example refers to a heterogeneous population of solid tumor patients: the initial part is a dose escalation open to any type of solid tumor. As the results come through, the treatment is shown to have a greater effect on some tumor types than on others. This information can be fed back into the study design by ‘enriching’ the patient population, i.e. by recruiting more patients with tumors in the most positively impacted classes and by no longer recruiting patients with tumors on which the treatment was shown to be ineffective or to have only a limited effect. As the trial then progresses from Phase I into Phase II, there is the potential to better define the cohorts of patients to analyse (based on indication, dose level or other factors). This ultimately leads to a more efficient study with better-tailored questions that are defined based on earlier looks at the data rather than after the study has been completed, as opposed to scenarios where additional subgroup analyses are planned post hoc to rescue the study results.

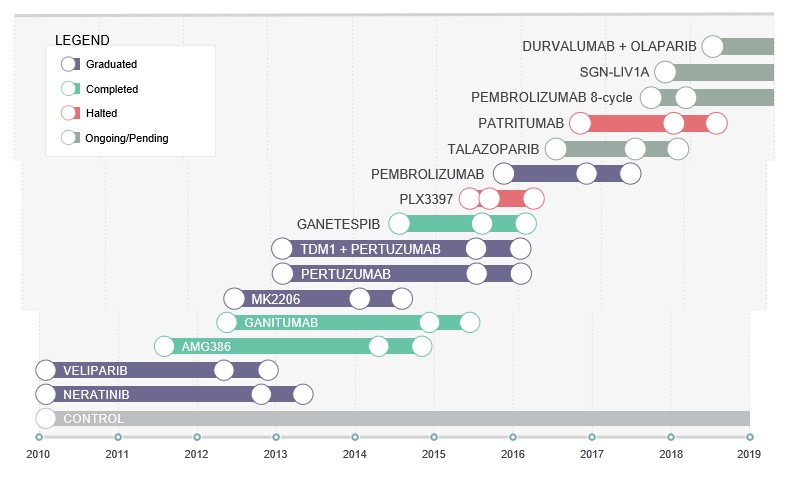

Example 3: I-SPY 2

I-SPY 2 is a clinical trial for women with newly diagnosed locally advanced breast cancer [8]. As of 2019, 16 compounds have been used in the study (3 of which have received accelerated approval) and compared to a historical control arm with, by now, decades of data.

This kind of trial, also referred to as a ‘platform trial’ [9], is a massive shift in the drug development paradigm. It is the result of a large-scale collaboration that aims to break down boundaries across companies, with the view that sharing data on competitors’ treatments can potentially lead to a quicker and more efficient way to prove drug effectiveness. Through adaptive randomization [10], patients are assigned to a given treatment arm based on their breast cancer genetic signature, such that drugs that are more effective in that signature are assigned to these patients with an increased probability. By doing so, a ‘Pick the Winner’-like design is implemented, with the potential (as mentioned above) for more than a single therapy ultimately being regarded as a ‘winner’.
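To give a flavour of how adaptive randomization can work in principle, here is a minimal sketch in which the allocation probability of each arm is set to the estimated posterior probability that it has the highest response rate. This is not the actual I-SPY 2 algorithm: the Beta-Binomial model with a uniform prior, the function name and the interim counts are all assumptions made for illustration.

```python
import random


def allocation_probabilities(successes, failures, draws=10_000, seed=0):
    """Estimate, for each arm, the posterior probability of being the best arm.

    Assumes a binary response and an independent Beta(1, 1) prior per arm,
    so each posterior is Beta(1 + successes, 1 + failures).
    """
    rng = random.Random(seed)
    wins = [0] * len(successes)
    for _ in range(draws):
        # Draw one plausible response rate per arm from its posterior
        samples = [rng.betavariate(1 + s, 1 + f)
                   for s, f in zip(successes, failures)]
        # Credit the arm with the highest sampled response rate
        wins[samples.index(max(samples))] += 1
    return [w / draws for w in wins]


# Hypothetical interim data within one biomarker signature (3 arms, 20 patients each)
successes = [4, 9, 6]
failures = [16, 11, 14]

probs = allocation_probabilities(successes, failures)
print("Allocation probabilities:", [round(p, 2) for p in probs])

# Randomize the next patient in this signature using those probabilities
next_arm = random.Random(1).choices(range(len(probs)), weights=probs)[0]
print("Next patient assigned to arm", next_arm)
```

With these made-up counts, the arm with 9 responders out of 20 receives most of the allocation probability, whilst the other arms can still be assigned. Real designs typically add safeguards, such as a protected control allocation or limits on how extreme the probabilities can become.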

The collaboration required to share the information instead of running independent trials is financially very sound for the companies involved, as they can learn from the results of the other companies’ INDs and use them to shape their own. It is also a patient-centric approach, as there is less burden on patients, fewer patients need to be recruited, and all companies are working towards a successful compound as the winning INDs move forward.

Issues and Challenges

Whilst the discussion above may appear very light-hearted, adaptive clinical trials are by no means a panacea for all drug development problems. It can be argued that with greater flexibility also come greater problems, and not all of these can be foreseen at the planning stage, resulting in an increased chance of a flawed study that won’t be accepted by regulators.

One main concern for regulators is that by allowing for changes after randomization has taken place, we open the way to a loss of control over the type I error, thus resulting in an increased rate of false claims of efficacy. Many adaptation strategies have been discussed in the literature that show how this problem can be tackled, but they generally require extensive simulation studies to ensure the error is controlled whilst also achieving the ‘efficiency’ goals the study is designed for. This underlines the need for companies embarking on an adaptive trial to either involve their biometrics department at the very beginning of the planning process or seek the help of experienced statisticians to:

1) clarify whether there’s any real benefit in pursuing an adaptive strategy,

2) define the set of adaptation rules.

Of these, 1) is somewhat more critical, because ultimately even a carefully planned adaptation will not serve its purpose (increasing efficiency and improving overall study quality) if adapting is not really required. As an example, think of sample size re-estimation: this is often used when there’s little information on anticipated drug effects and/or their uncertainty, but it can’t be a replacement for a careful literature assessment to identify previous similar studies from which to extract information on the clinical effect of relevance.
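The type I error concern raised earlier in this section, and the role simulation plays in addressing it, can be illustrated with a small sketch. It simulates trials in which the drug has no effect at all and counts how often a two-sided z-test crosses the significance threshold at any of five equally spaced looks. The setting is deliberately simplified (a single arm with normally distributed outcomes and known variance), and the function name and default values are assumptions made for this example. The constant 2.413 is the standard Pocock group sequential boundary for five looks at an overall two-sided 5% level.

```python
import math
import random


def false_positive_rate(n_looks, critical_value, n_per_look=20,
                        n_trials=20_000, seed=42):
    """Share of null trials (no true effect) that cross the critical value
    at any interim or final look, stopping at the first crossing."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_trials):
        running_sum, n = 0.0, 0
        for _ in range(n_looks):
            # Sum of n_per_look standard normal outcomes, drawn in one go
            running_sum += rng.gauss(0.0, math.sqrt(n_per_look))
            n += n_per_look
            z = running_sum / math.sqrt(n)
            if abs(z) > critical_value:
                rejections += 1
                break
    return rejections / n_trials


# Five looks using the usual single-look threshold vs. a Pocock boundary
naive = false_positive_rate(n_looks=5, critical_value=1.96)
pocock = false_positive_rate(n_looks=5, critical_value=2.413)
print(f"Unadjusted threshold at every look: {naive:.3f}")
print(f"Pocock boundary at every look:      {pocock:.3f}")
```

In theory, five unadjusted looks push the false-positive rate from 5% to roughly 14%, whereas the adjusted boundary keeps it close to 5%. The simulated figures should land near those values, and real adaptive designs need far more elaborate simulations of the same kind.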

Alongside statistical challenges (for which a variety of reviews exist in the literature [11-13]), one main reason why adaptive trials are still not that popular is the general conservatism that permeates the industry, which is also due to the fact that an adaptive trial is often seen as an unnecessary risk. As a matter of fact, although adaptive methodologies have been around for quite a while now, there are not that many case studies of these designs having been successfully submitted to regulatory bodies. Companies might therefore be cautious and would rather spend extra money on a standard design in exchange for greater confidence that positive results will be considered sufficient for approval.

Conclusion

Adaptive trials are not a solution to all drug development problems, but they do provide a novel framework within which current development programs need to be re-evaluated in the search for ‘better ways’ to achieve the same goal. Despite operational and statistical complexities (and risks), flexible designs are increasingly being used by companies and endorsed by regulators, so there’s no real barrier preventing the adaptive option from at least being put on the table for discussion. Involving a statistician in this discussion will serve the double purpose of establishing whether adaptive rules are really needed in the first place, and of clarifying their scope thereafter.

References

[1] FDA Draft Guidance October 2018. Adaptive Designs for Clinical Trials of Drugs and Biologics

[2] https://www.fda.gov/science-research/science-and-research-special-topics/critical-path-initiative

[3] Gallo P, Chuang-Stein C, Dragalin V, Gaydos B, Krams M and Pinheiro J. Adaptive designs in clinical development – an executive summary of the PhRMA working group. J Biopharm Stat 2006; 16: 275-283

[4] Jennison C, Turnbull B. Discussion of ‘Adaptive designs in clinical development – an executive summary of the PhRMA working group.’ J Biopharm Stat 2006; 16: 293-298

[5] Grieve AP, Krams M. ASTIN: a Bayesian adaptive dose–response trial in acute stroke. Clinical Trials 2005; 2(4): 340–351.

[6] Royston P, Parmar MKB, Qian W. Novel designs for multi-arm clinical trials with survival outcomes with an application in ovarian cancer. Statist Med 2003; 22(14): 2239-2256

[7] Hills RK, Burnett AK. Applicability of a “Pick A Winner” trial design to acute myeloid leukemia. Blood 2011; 118: 2389-2394

[8] https://www.ispytrials.org/i-spy-platform/i-spy2

[9] Esserman L, Hylton N, Asare S, et al. I-SPY2: Unlocking the Potential of the Platform Trial. In Z. Antonijevic & R. A. Beckman (Eds.), Platform Trial Designs in Drug Development: Umbrella Trials and Basket Trials (Chapman and Hall/CRC); pp. 3-22, 2018.

[10] Berry, D.A. Bayesian clinical trials. Nat. Rev. Drug Discov. 5, 27–36 (2006).

[11] Kairalla JA, Coffey CS, Thomann MA, Muller KE. Adaptive trial designs: a review of barriers and opportunities. Trials 2012; 13(article 145)

[12] Cerqueira FP, Jesus AMC, Cotrim MD. Adaptive Design: A Review of the Technical, Statistical, and Regulatory Aspects of Implementation in a Clinical Trial. Ther Innov Regul Sci. 2019 Feb 27:2168479019831240. doi: 10.1177/2168479019831240.

[13] Chow SC, Corey R. Benefits, challenges and obstacles of adaptive clinical trial designs. Orphanet J Rare Dis. 2011; 6: 79.